Overview

Dictionary-based and part-based methods are two of the most popular approaches to visual recognition. In both cases, a mid-level representation is built on top of low-level image descriptors, and top-level classifiers use this mid-level representation to perform recognition. While current part-based approaches usually train the mid- and top-level representations jointly, this is not usually the case for dictionary-based schemes. A main reason is the complex data association problem caused by the large dictionaries that dictionary-based approaches typically need.

In this work, we propose a novel hierarchical dictionary-based approach to visual recognition that jointly learns suitable mid- and top-level representations. To achieve this, we use a group-sparsity regularization term that induces word sharing, specialization and compactness. Furthermore, by using a max-margin learning framework, our approach directly handles the multiclass case at both levels of abstraction.
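To make the effect of a group-sparsity term concrete, here is a minimal sketch of a group-lasso penalty and its proximal operator (block soft-thresholding). It assumes, for illustration only, that the top-level weights are stored as one group per (class, word) pair, holding the weights that link that word to that class over the pooling regions. This grouping and the names `group_penalty` and `prox_group` are hypothetical, not taken from the papers.

```python
import math
from typing import List

# Hypothetical layout (assumption): weights[c][k] is the vector of top-level
# weights linking visual word k to class c, one entry per pooling region.
Weights = List[List[List[float]]]


def group_penalty(weights: Weights) -> float:
    """Group-lasso penalty: sum of the L2 norms of all (class, word) groups."""
    return sum(
        math.sqrt(sum(v * v for v in group))
        for per_class in weights
        for group in per_class
    )


def prox_group(weights: Weights, step: float) -> Weights:
    """Proximal operator of step * group_penalty (block soft-thresholding).

    Each group is shrunk toward zero by `step` in L2 norm; groups whose norm
    is at most `step` become exactly zero, i.e. the class stops using that word.
    """
    out: Weights = []
    for per_class in weights:
        new_class = []
        for group in per_class:
            norm = math.sqrt(sum(v * v for v in group))
            scale = max(0.0, 1.0 - step / norm) if norm > 0 else 0.0
            new_class.append([scale * v for v in group])
        out.append(new_class)
    return out
```

Because whole groups are zeroed rather than individual entries, each class ends up relying on a small subset of words, which is the compactness effect the penalty is meant to induce.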

The Method

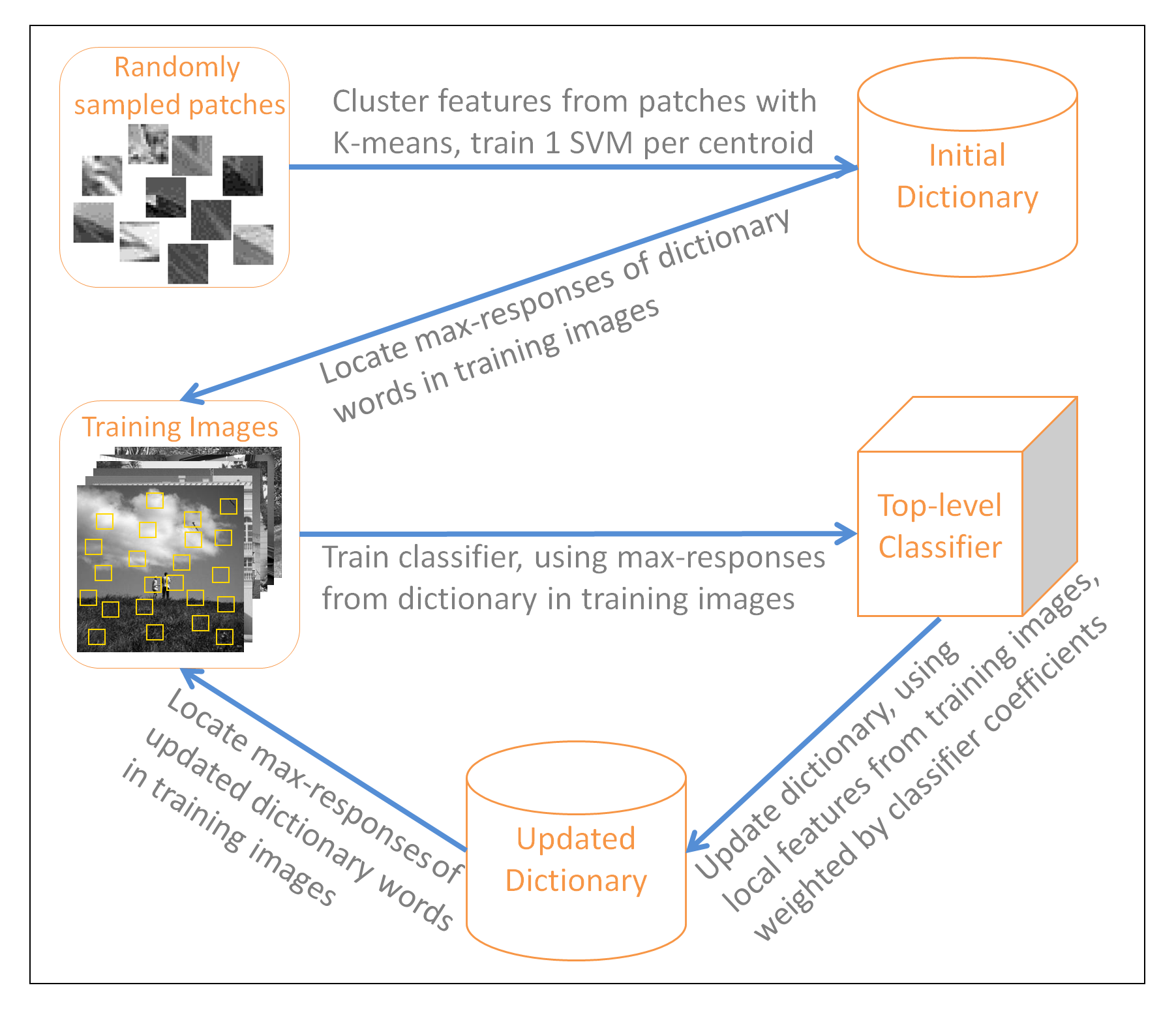

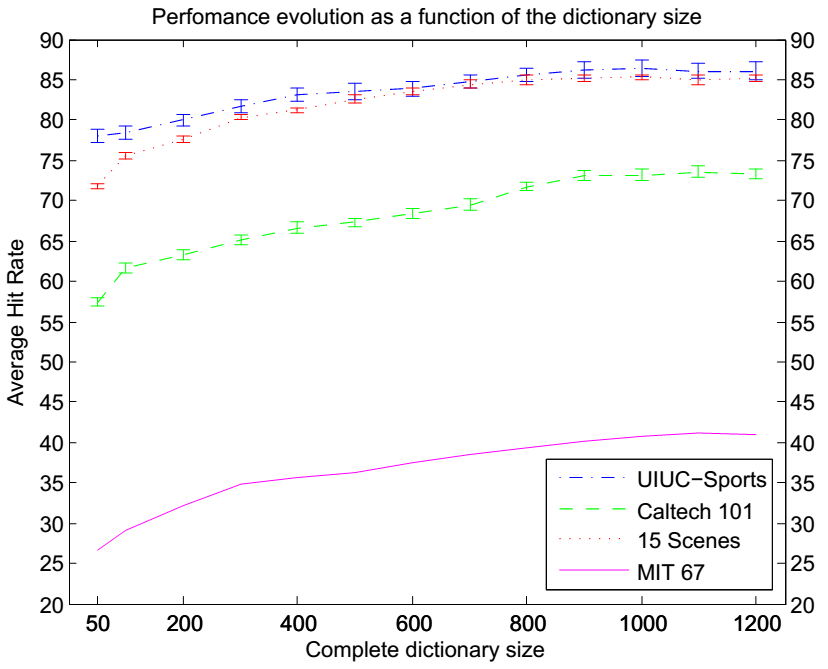

A high-level overview of our method is shown in the figures above. Both the top-level classifier and the mid-level dictionary are modeled as sets of linear classifiers (SVMs).

We model images using a set of responses, obtained by computing the dot product between local feature descriptors (HOG+LBP) and the words of the visual dictionary. We then encode each image with a max-pooling strategy, i.e., by selecting the maximum response of each visual word over a set of rectangular regions, e.g., the cells of a spatial pyramid.
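The encoding described above can be sketched as follows, assuming each local descriptor comes with the (x, y) position of its patch and each pooling region is an axis-aligned rectangle `(x0, y0, x1, y1)`. The function names, the half-open rectangle convention and the choice of 0.0 for a region with no patches are illustrative assumptions, not the authors' code; HOG+LBP extraction itself is not shown.

```python
from typing import List, Sequence, Tuple

Rect = Tuple[float, float, float, float]  # (x0, y0, x1, y1), half-open


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def spatial_pyramid(width: float, height: float, levels: int = 2) -> List[Rect]:
    """Cells of a spatial pyramid: a 1x1 grid, then 2x2, 4x4, ..."""
    rects: List[Rect] = []
    for level in range(levels):
        n = 2 ** level
        for i in range(n):
            for j in range(n):
                rects.append((i * width / n, j * height / n,
                              (i + 1) * width / n, (j + 1) * height / n))
    return rects


def encode_image(descriptors, positions, dictionary, regions) -> List[float]:
    """Max-pooled word responses, one entry per (region, word) pair.

    descriptors: local feature vectors, one per patch.
    positions:   (x, y) location of each patch.
    dictionary:  visual words, each a linear filter of descriptor length.
    """
    # Response of every word to every patch: a plain dot product.
    responses = [[dot(w, d) for w in dictionary] for d in descriptors]
    code: List[float] = []
    for (x0, y0, x1, y1) in regions:
        inside = [r for r, (x, y) in zip(responses, positions)
                  if x0 <= x < x1 and y0 <= y < y1]
        for k in range(len(dictionary)):
            # Max-pooling: keep the strongest response of word k in the region
            # (0.0 if the region contains no patch, an assumed convention).
            code.append(max((r[k] for r in inside), default=0.0))
    return code
```

With a two-level pyramid the code has 5 × (dictionary size) entries, one block of word responses per cell.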

Learning is performed using max-margin structured output techniques and alternating minimization. We estimate the top-level classifier parameters by means of a Structural SVM, while the visual dictionary parameters are learnt using a Latent Structural SVM.
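For the top-level step of the alternation (dictionary held fixed), a multiclass Structural SVM with a 0/1 label loss reduces to a Crammer-Singer style multiclass SVM. The sketch below takes one stochastic subgradient step on that objective. It is a simplified stand-in (plain L2 regularizer, fixed learning rate, hypothetical names `loss_augmented_argmax` and `sgd_step`) rather than the solver used in the papers, and it does not cover the Latent Structural SVM used for the dictionary.

```python
from typing import List, Sequence


def score(w_c: Sequence[float], x: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(w_c, x))


def loss_augmented_argmax(W, x, y: int) -> int:
    """argmax over labels c of Delta(y, c) + <w_c, x>, with 0/1 loss Delta."""
    return max(range(len(W)),
               key=lambda c: (0.0 if c == y else 1.0) + score(W[c], x))


def sgd_step(W: List[List[float]], x, y: int, lam: float, lr: float) -> float:
    """One subgradient step on lam/2 * ||W||^2 + structural hinge loss.

    x is an image code (e.g. max-pooled word responses), y its label.
    Updates W in place and returns the hinge loss before the update.
    """
    y_hat = loss_augmented_argmax(W, x, y)
    hinge = (0.0 if y_hat == y else 1.0) + score(W[y_hat], x) - score(W[y], x)
    # Gradient of the L2 regularizer: shrink every class vector.
    for c in range(len(W)):
        W[c] = [(1.0 - lr * lam) * v for v in W[c]]
    # Margin violated: move w_y toward x and w_y_hat away from it.
    if y_hat != y:
        W[y] = [v + lr * xi for v, xi in zip(W[y], x)]
        W[y_hat] = [v - lr * xi for v, xi in zip(W[y_hat], x)]
    return hinge
```

In an alternating scheme, steps like this would be repeated over the training set with the dictionary fixed, before switching to a dictionary update with the top-level weights fixed.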

Results

We performed quantitative and qualitative experiments on four standard benchmark datasets: Caltech 101, 15 Scene Categories, UIUC-Sports and MIT67.

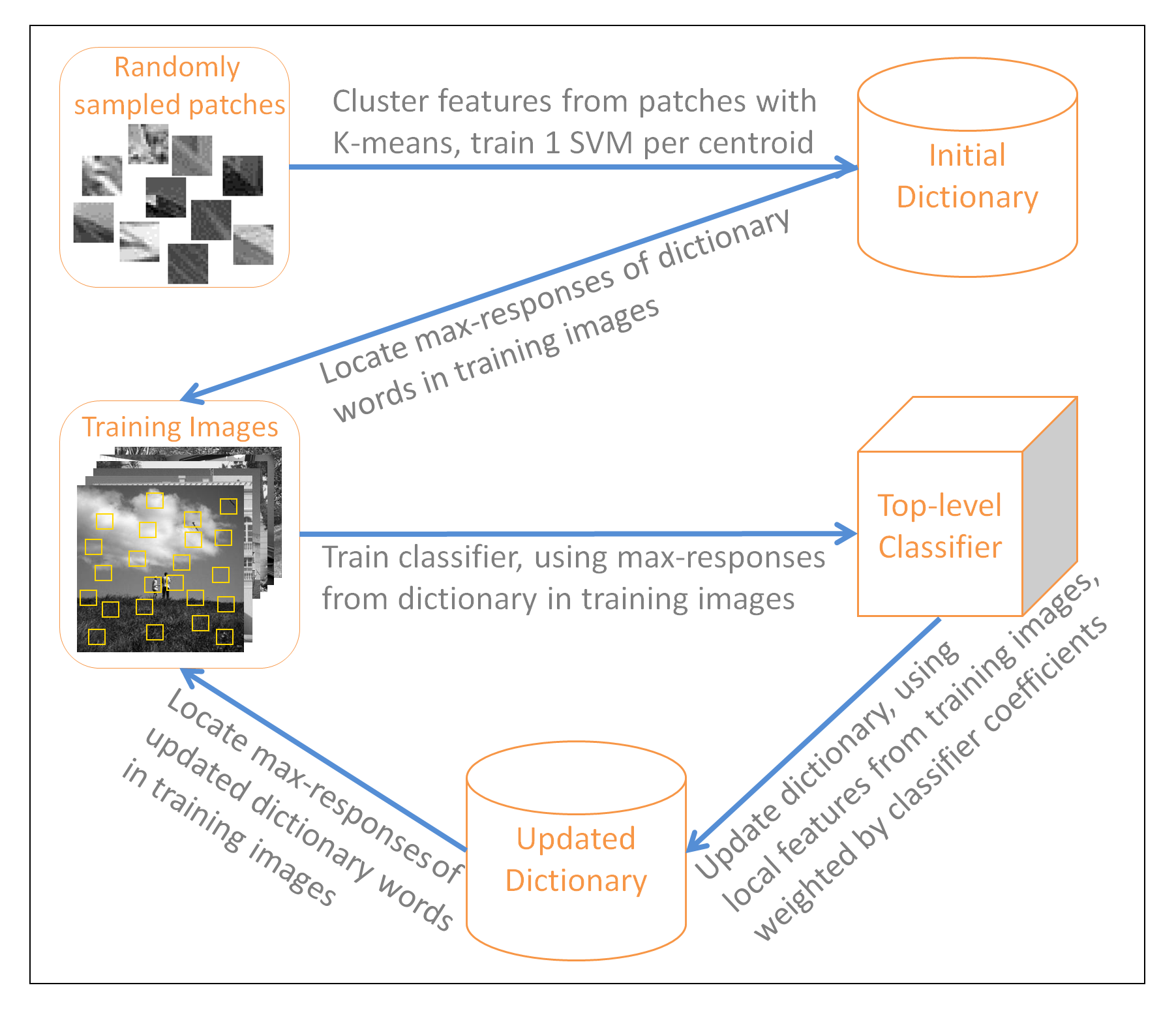

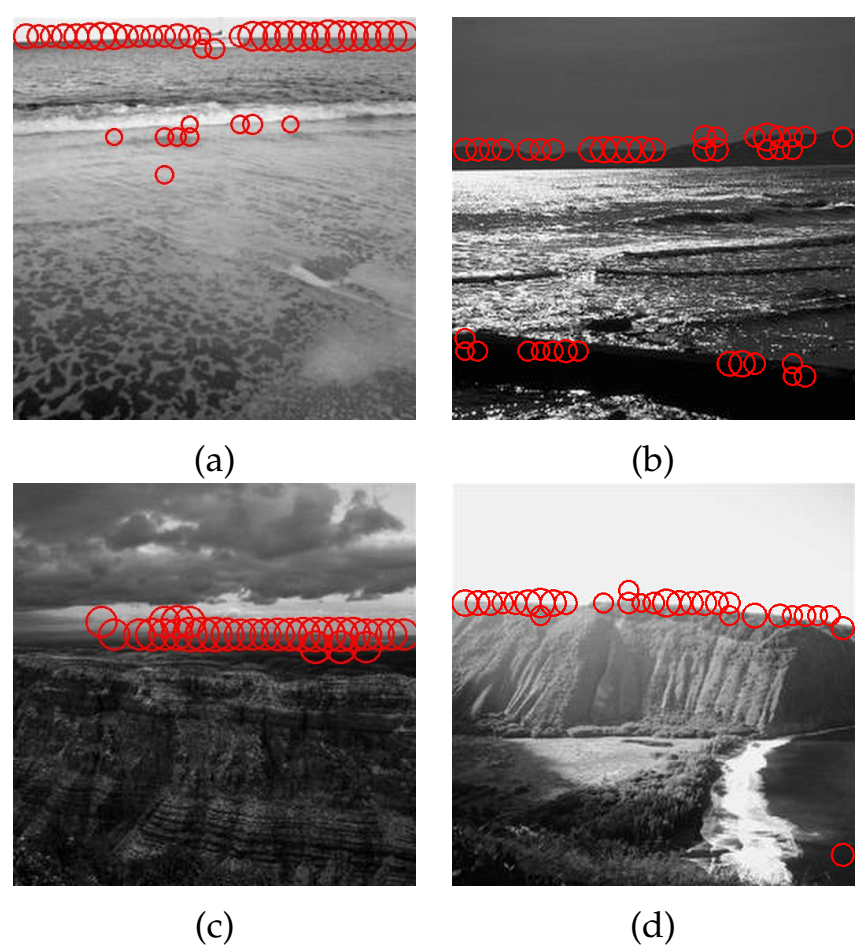

Word Visualization: The group-sparse regularizer leads our method to generate visual words that are shared by only a few categories, allowing them to specialize in specific visual patterns and increasing their discriminative power. The following figure shows the activations of a word shared by two categories. This word appears to be associated with horizontal edge patterns such as the horizon, a pattern shared by the categories MITcoast (5a, 5b) and MITopencountry (5c, 5d).
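One simple way to produce activation examples like these is to rank all patches of a set of images by their response to a single word and keep the strongest ones. The sketch below does that; it is an assumed procedure for illustration (the name `top_activations` and the flat list-of-patches input are hypothetical), not necessarily how the figures were generated.

```python
import heapq
from typing import List, Sequence, Tuple


def top_activations(word: Sequence[float],
                    images: Sequence[Sequence[Sequence[float]]],
                    n: int = 4) -> List[Tuple[float, int, int]]:
    """Return the n strongest (response, image_index, patch_index) triples.

    images[i][p] is the descriptor of patch p in image i; the response is
    the dot product between the word and that descriptor.
    """
    scored = (
        (sum(a * b for a, b in zip(word, desc)), i, p)
        for i, patches in enumerate(images)
        for p, desc in enumerate(patches)
    )
    # heapq.nlargest avoids sorting every patch when only a few are needed.
    return heapq.nlargest(n, scored)
```

The returned image and patch indices can then be used to crop and display the patches where the word fires most strongly.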

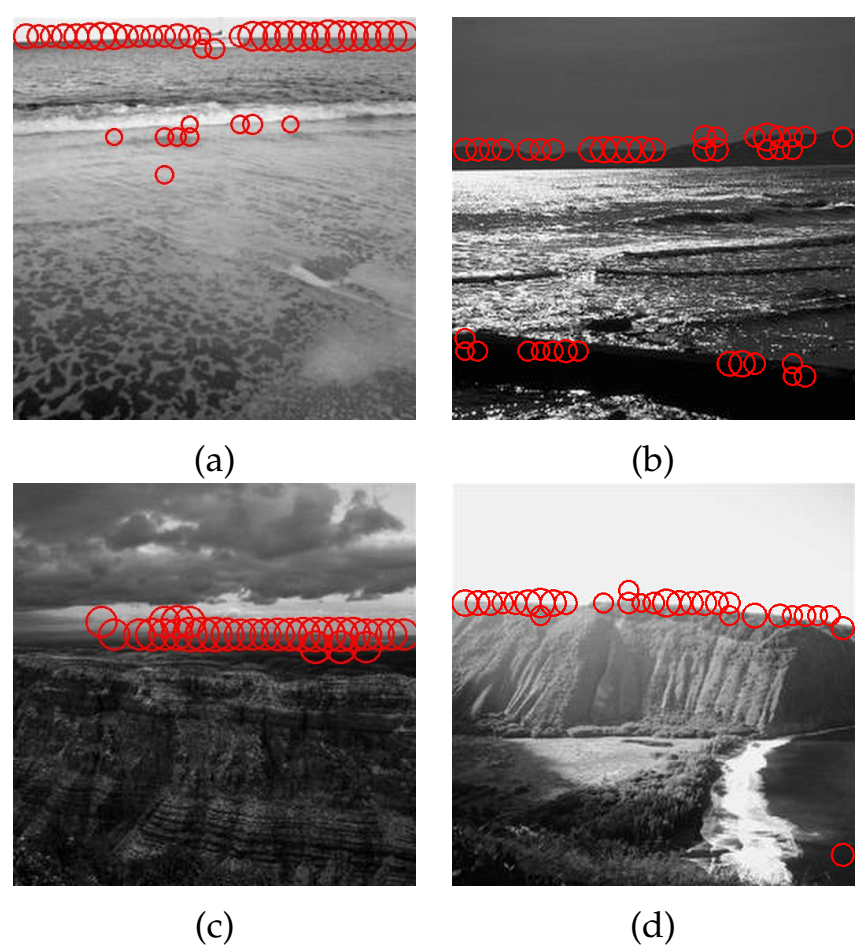

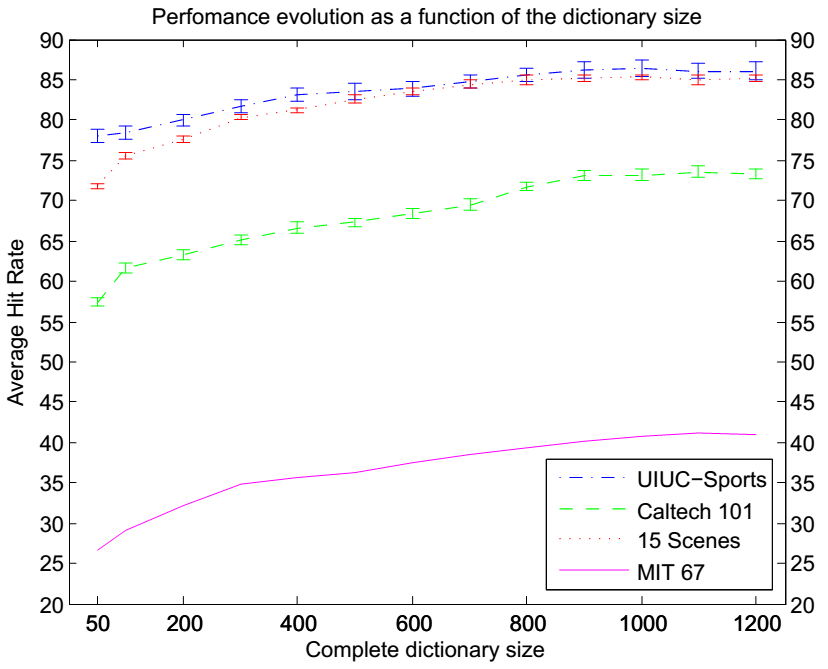

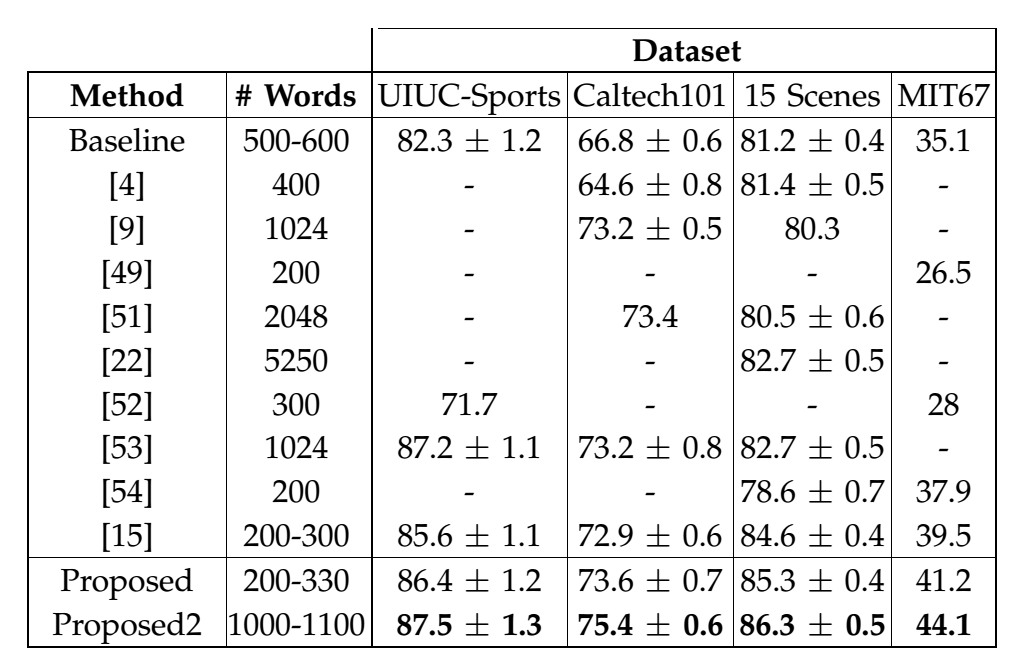

Categorization Performance: When analyzing categorization performance as a function of dictionary size, we observe that the best performance is achieved with a dictionary of around 1000-1100 words. However, due to the group-sparse regularizer, each class effectively uses far fewer words, around 200-300 depending on the dataset.
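The effective number of words per class can be read off the learned top-level weights by counting the word groups that are not (numerically) zero. The sketch assumes, hypothetically, that `weights[c][k]` holds the weights linking word k to class c over the pooling regions, and uses an arbitrary tolerance `tol` to decide what counts as zero.

```python
import math
from typing import List


def words_per_class(weights, tol: float = 1e-8) -> List[int]:
    """For each class, count the words whose weight group has L2 norm > tol."""
    return [
        sum(1 for group in per_class
            if math.sqrt(sum(v * v for v in group)) > tol)
        for per_class in weights
    ]
```

Averaging these counts over classes gives a figure comparable to the per-class word usage reported above, as opposed to the full dictionary size.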

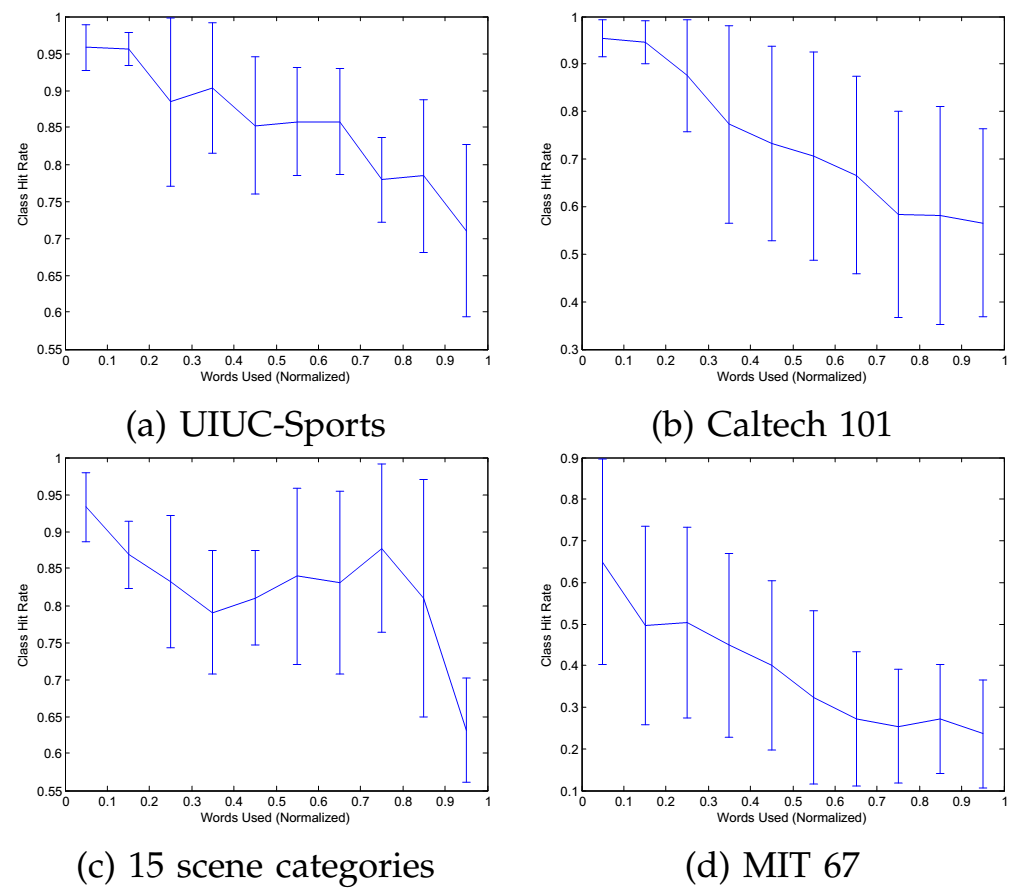

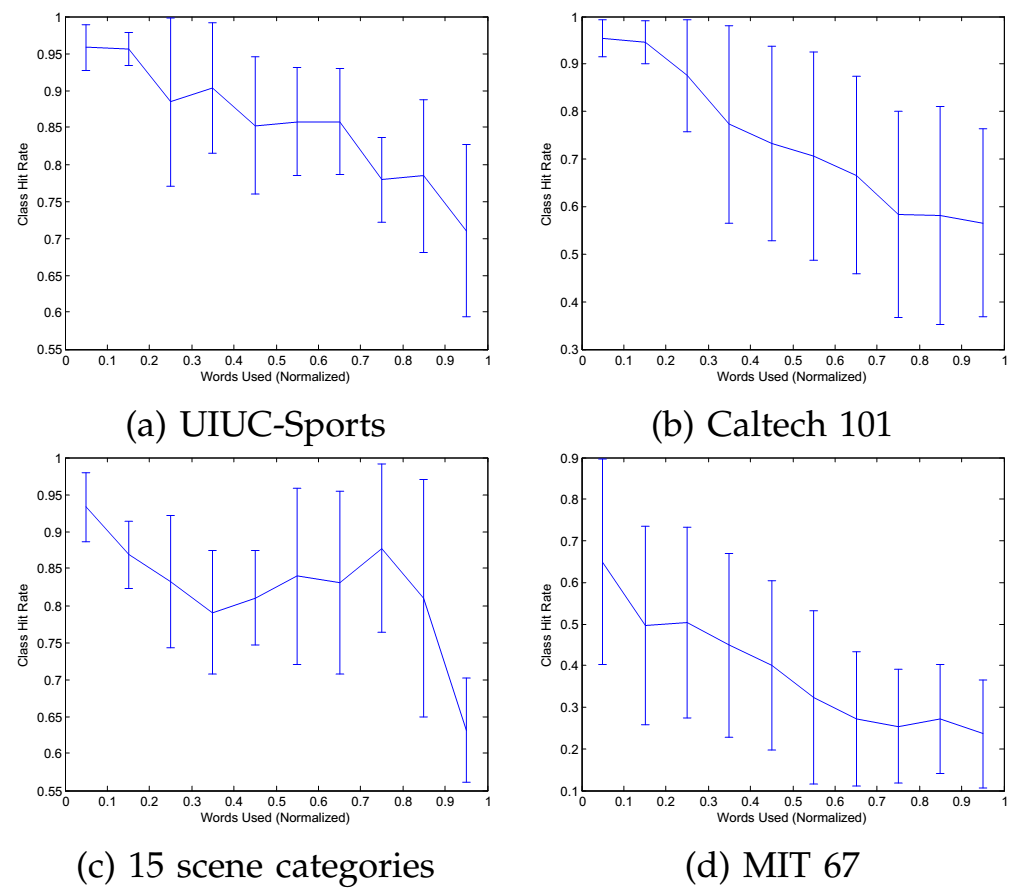

As each category adaptively selects the number of words it uses, we can study, for each category, the relation between performance and the number of words used. Our results confirm the intuition of a negative correlation: the easier a category is to classify (high hit rate), the fewer words it needs, and vice versa.
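The sign of such a relation can be quantified with a Pearson correlation coefficient between per-category hit rates and per-category word counts. A minimal sketch follows; on Python 3.10+ the standard library's `statistics.correlation` computes the same quantity.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

Passing hit rates as `xs` and word counts as `ys`, a value below zero corresponds to the negative correlation described above.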

Although the standard deviation is large in some cases, categories with higher performance clearly tend to use fewer words than categories with lower performance, which is the behavior we expected.

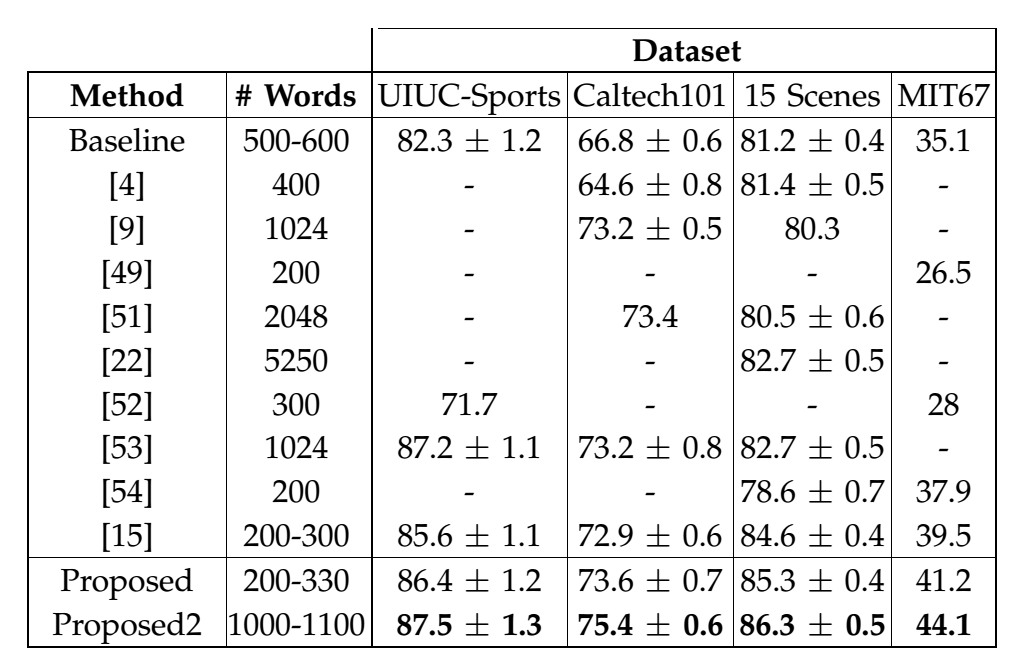

Finally, comparing the categorization performance of our work with that of competing methods that use a similar patch size to extract low-level features, we observe that our approach achieves state-of-the-art performance while using a dictionary that is generally an order of magnitude smaller. References for the competing methods can be found in (Lobel et al., 2015).

Acknowledgements

This work was partially funded by FONDECYT grant 1120720 and NSF grant 11-1218709.

Related Publications

- H. Lobel, R. Vidal and A. Soto. Learning Shared, Discriminative, and Compact Representations for Visual Recognition. In IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2015. Download: [pdf]

- H. Lobel, R. Vidal and A. Soto. Hierarchical Joint Max-Margin Learning of Mid and Top Level Representations for Visual Recognition. In International Conference on Computer Vision (ICCV), 2013.

- H. Lobel, R. Vidal, D. Mery and A. Soto. Joint Dictionary and Classifier Learning for Categorization of Images Using a Max-margin Framework. In Pacific-Rim Symposium on Image and Video Technology (PSIVT), 2013. (Best student paper award)

Feedback

If you have any questions, please email me at: halobel@uc.cl